The Shanghai-based team, with members from UC Berkeley, UCLA, Stanford, Caltech, and other internationally renowned universities in the United States, is committed to bringing AI technology to traditional hearing aids. It focuses on developing deep learning algorithms and on research and development in intelligent medicine, with the aim of building a world-class brand of intelligent hearing aids and health technology.

Link to the original paper:

http://www.ennohearingaid.com/blog-detail-147.html

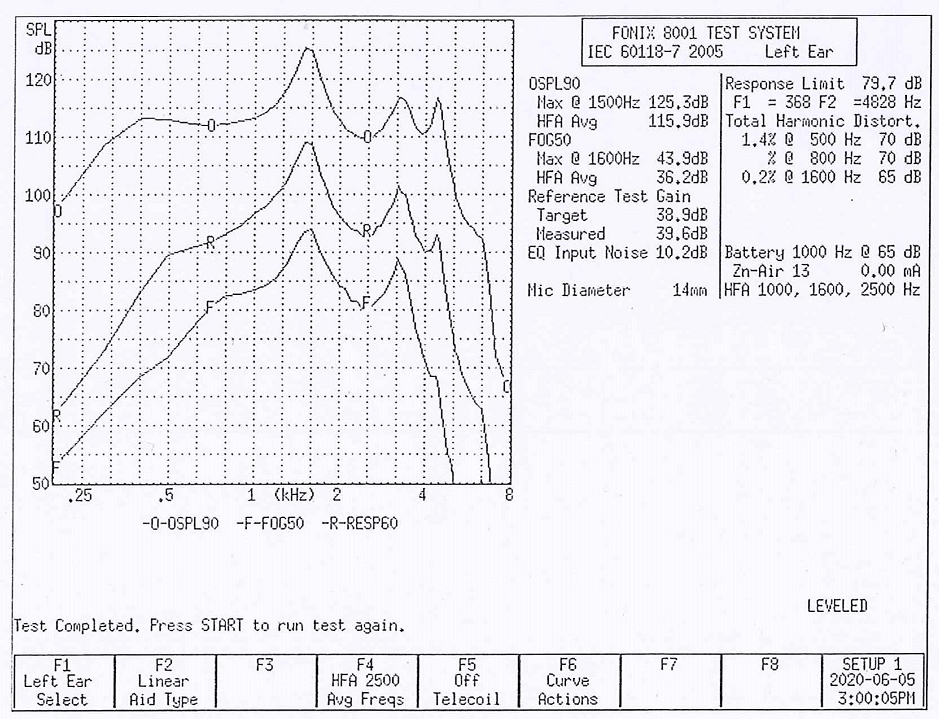

Audiograms are often used to describe people's ability to hear. Through audiograms, audiologists can recommend and adjust appropriate hearing aids for people with hearing loss.

Since the US FDA released its proposal for over-the-counter (OTC) hearing aids, consumer hearing aid products have attracted growing interest. Hearing aids sold without the involvement of a professional audiologist can be expected to rely more heavily on automated fitting to serve their users well.

Automatic audiogram recognition is therefore designed to help hearing aid manufacturers read hearing data faster and more conveniently, without manual intervention.

It is worth noting that, in a preview of a new system version in May last year, Apple also mentioned that audiogram recognition would be added to a future iOS release. However, Apple's system is essentially closed source and comes with restrictions, whereas an open-source dataset and model make it easier for peers to exchange ideas and jointly advance the industry.

Automatic classification of audiograms

There has been earlier work on automatically classifying audiograms. These models can determine from an audiogram whether a user has conductive, sensorineural, or mixed hearing loss.

However, they cannot extract the complete information in the audiogram, i.e., the user's hearing level at each frequency, which is essential for hearing aid fitting. These models are therefore not suitable for hearing aid fitting.

Research and development of audiogram recognition

Compared to the audiogram classification task, the task of audiogram recognition requires the extraction of complete information from the audiogram, which is more challenging.

To this end, the researchers created a dataset of 420 photos of audiograms taken in various environments and proposed a baseline for the dataset, the multi-stage audiogram recognition network (MAIN).

The model first identifies the chart area, then predicts the perspective distortion of the chart area and corrects it.

On the corrected image, the model reads the audiogram data by locating the axes, the scale marks, and the hearing symbols on the polylines.

(Examples from the 420 audiograms: some have shadows cast by other objects, some are partly covered by objects such as signature pens, and some are distorted or folded.)

Three stages of audiogram recognition

The researchers designed a multi-stage audiogram recognition network, the first network structure that can obtain the complete information in an audiogram, that is, the user's degree of hearing loss at each frequency.

Specifically, the network can be divided into three phases: audiogram detection, perspective correction, and axis and symbol detection.

# Phase 1 #

Since the input images may contain background content other than the audiogram, the researchers first used Faster-RCNN to detect the audiogram as a whole and crop it out. This step removes other interfering information from the input picture and makes the subsequent recognition steps easier.
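The cropping step can be illustrated with a minimal sketch. It assumes the detector has already returned candidate boxes with confidence scores; the function name `crop_best_detection`, the box layout `(x_min, y_min, x_max, y_max)`, and the toy image are illustrative assumptions and are not taken from the original work.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # assumed layout: (x_min, y_min, x_max, y_max) in pixels


def crop_best_detection(image: List[List[int]],
                        detections: List[Tuple[Box, float]]) -> List[List[int]]:
    """Crop the image to the highest-scoring detection box (hypothetical helper)."""
    if not detections:
        raise ValueError("no audiogram detected")
    (x0, y0, x1, y1), _score = max(detections, key=lambda d: d[1])
    # Clamp the box to the image bounds before slicing.
    height, width = len(image), len(image[0])
    x0, y0 = max(0, x0), max(0, y0)
    x1, y1 = min(width, x1), min(height, y1)
    return [row[x0:x1] for row in image[y0:y1]]


# Toy 4x6 "image" whose pixel value encodes its position (10 * row + column).
image = [[10 * r + c for c in range(6)] for r in range(4)]
# Made-up detector output: two candidate boxes with confidence scores.
detections = [((1, 1, 4, 3), 0.92), ((0, 0, 2, 2), 0.40)]
crop = crop_best_detection(image, detections)
print(crop)
```

Only the highest-scoring box is kept here, on the assumption that each photo contains a single audiogram.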

# Phase 2 #

In order to improve the accuracy of the recognition results, the researchers proposed two methods to solve the perspective distortion problem.

Perspective distortion often occurs in photos: lines that are parallel in reality may not appear parallel in the photo.

For audiogram recognition, perspective distortion can cause the borders of the audiogram to no longer be parallel, which reduces the accuracy of the reading.

To this end, the researchers proposed two methods for perspective correction: one based on line detection and the other based on an RCNN model.
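Whichever method locates the distorted chart border, the correction itself is commonly expressed as a homography that maps the four detected corners onto an upright rectangle. The sketch below shows that general technique in plain Python. It is not the researchers' implementation, and the corner coordinates, the target size, and the helper names are made up for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(a: Matrix, b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("corners are degenerate (e.g. three are collinear)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 homography (h33 fixed to 1) mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def warp_point(h: Matrix, p: Point) -> Point:
    """Apply the homography to one point, including the perspective division."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Made-up chart corners in the photo: top-left, top-right, bottom-right, bottom-left.
corners = [(12.0, 20.0), (205.0, 8.0), (220.0, 190.0), (5.0, 170.0)]
# Assumed upright target rectangle of 200 x 160 pixels.
target = [(0.0, 0.0), (200.0, 0.0), (200.0, 160.0), (0.0, 160.0)]
H = homography(corners, target)
corrected = [warp_point(H, p) for p in corners]
print([(round(u, 3), round(v, 3)) for u, v in corrected])
```

After the warp, the chart borders are parallel to the image edges again, so a point's horizontal and vertical pixel coordinates can be read against the two axes independently.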

# Phase 3 #

In order to obtain the degree of hearing loss at each frequency, the researchers first detected the coordinate axes of the audiogram.

They used Faster-RCNN to detect the scale marks on the frequency axis and on the hearing level (loudness) axis. They then applied the RANSAC algorithm separately to the positions and the values of these marks, which excludes erroneous detections in both the spatial and the numerical dimension, and fitted the corresponding coordinate axes.
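The role of RANSAC here can be sketched with a simplified example. It assumes each detected scale mark has been reduced to a pair (pixel position, numeric value) and fits a single line from pixel position to value, which is a simplification of the separate spatial and numerical filtering described above. The tick data, the tolerance, and the function name `ransac_line` are illustrative assumptions.

```python
import random
from typing import List, Tuple

Tick = Tuple[float, float]  # (pixel position along the axis, value read from the label)


def ransac_line(points: List[Tick], tol: float,
                iters: int = 200, seed: int = 0) -> Tuple[float, float, List[Tick]]:
    """Fit value = a * pixel + b while ignoring outlying tick detections."""
    rng = random.Random(seed)
    best_inliers: List[Tick] = []
    for _ in range(iters):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        if x1 == x2:
            continue  # two ticks at the same pixel cannot define a line
        a = (y2 - y1) / (x2 - x1)
        b = y1 - a * x1
        inliers = [(x, y) for x, y in points if abs(a * x + b - y) <= tol]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    if len(best_inliers) < 2:
        raise ValueError("could not fit an axis")
    # Refine the model with a least-squares fit on the inliers only.
    n = len(best_inliers)
    mx = sum(x for x, _ in best_inliers) / n
    my = sum(y for _, y in best_inliers) / n
    sxx = sum((x - mx) ** 2 for x, _ in best_inliers)
    sxy = sum((x - mx) * (y - my) for x, y in best_inliers)
    a = sxy / sxx
    return a, my - a * mx, best_inliers


# Made-up hearing level axis: six correct ticks (0 to 50 dB HL, 40 px apart),
# one misread label (20 read as 70), and one spurious detection.
ticks = [(40, 0), (80, 10), (120, 20), (160, 30), (200, 40), (240, 50),
         (120, 70), (300, 10)]
slope, intercept, inliers = ransac_line(ticks, tol=1.0)
print(slope, intercept, len(inliers))
```

For the frequency axis, the same fit would typically be applied to the logarithm of the tick values, since audiogram frequencies are spaced by octaves.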

The researchers then used Faster-RCNN to detect hearing loss symbols at various frequencies in the audiogram.

Finally, each symbol is projected onto the two fitted axes and read off to obtain the frequency and hearing loss corresponding to that symbol.
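A minimal sketch of this read-off step follows. It assumes the image has already been perspective-corrected, so that projecting onto an axis reduces to taking one pixel coordinate, and that each axis is given as a linear model (the frequency axis in log2 of Hz). Snapping to standard audiometric frequencies and 5 dB steps is an added assumption, as are the axis parameters and the function name `read_symbol`.

```python
import math
from typing import Tuple

# Frequencies commonly tested in audiometry (assumed snapping grid).
STANDARD_FREQS_HZ = [125, 250, 500, 750, 1000, 1500, 2000, 3000, 4000, 6000, 8000]


def read_symbol(x: float, y: float,
                freq_axis: Tuple[float, float],
                level_axis: Tuple[float, float]) -> Tuple[int, int]:
    """Convert a symbol's pixel position into (frequency in Hz, hearing level in dB HL)."""
    a_f, b_f = freq_axis    # log2(frequency) = a_f * x + b_f
    a_l, b_l = level_axis   # hearing level   = a_l * y + b_l
    log_freq = a_f * x + b_f
    level = a_l * y + b_l
    # Snap to the nearest standard frequency on the logarithmic scale.
    freq = min(STANDARD_FREQS_HZ, key=lambda f: abs(math.log2(f) - log_freq))
    # Snap the level to the nearest 5 dB step.
    return freq, 5 * round(level / 5)


# Made-up axes: 125 Hz sits at x = 100 px with 60 px per octave;
# 0 dB HL sits at y = 40 px with 4 px per dB.
freq_axis = (1 / 60, math.log2(125) - 100 / 60)
level_axis = (0.25, -10.0)
reading = read_symbol(283, 221, freq_axis, level_axis)
print(reading)
```

Snapping absorbs small localization errors in the detected symbol position, at the cost of assuming the audiogram only uses the listed frequencies.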

(The audiogram recognition network is divided into three stages: audiogram detection, perspective correction, and coordinate axis and symbol detection.)

Results of audiogram recognition

The researchers trained and tested the model on their open-source dataset. On this basis, they evaluated the final recognition results in detail and reported the hearing loss recognition accuracy, the frequency recognition accuracy, and the overall recognition accuracy.

The results show that the proposed method reaches 86% accuracy for hearing loss recognition, 96% for frequency recognition, and 84% for overall recognition, and that for 95% of the data the error relative to the true value is no more than 5 dB HL.
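The article does not spell out how these figures are computed. The sketch below shows one plausible reading, under these assumptions: each predicted symbol has already been paired with its ground-truth symbol, a frequency or hearing level counts as correct only on an exact match, and overall accuracy requires both to be correct. The sample pairs and the function name `recognition_metrics` are invented for illustration.

```python
from typing import Dict, List, Tuple

Reading = Tuple[int, int]  # (frequency in Hz, hearing level in dB HL)


def recognition_metrics(pairs: List[Tuple[Reading, Reading]],
                        tol_db: int = 5) -> Dict[str, float]:
    """Compute accuracies over (predicted, true) pairs under the stated assumptions."""
    n = len(pairs)
    if n == 0:
        raise ValueError("no symbol pairs to evaluate")
    freq_ok = sum(pred[0] == true[0] for pred, true in pairs)
    level_ok = sum(pred[1] == true[1] for pred, true in pairs)
    both_ok = sum(pred == true for pred, true in pairs)
    within = sum(abs(pred[1] - true[1]) <= tol_db for pred, true in pairs)
    return {
        "frequency_accuracy": freq_ok / n,
        "hearing_loss_accuracy": level_ok / n,
        "overall_accuracy": both_ok / n,
        "within_tolerance": within / n,
    }


# Invented example pairs: (predicted, true).
pairs = [
    ((500, 30), (500, 30)),    # fully correct
    ((1000, 45), (1000, 40)),  # level off by 5 dB
    ((2000, 60), (2000, 60)),  # fully correct
    ((3000, 70), (4000, 70)),  # wrong frequency
]
metrics = recognition_metrics(pairs)
print(metrics)
```

Under this reading, overall accuracy can never exceed the lower of the other two accuracies, which is consistent with the reported 84% sitting just below 86%.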

In addition, the researchers tested the method on 30 scanned audiograms, where it achieved an accuracy of more than 98%.

Summary

The researchers have introduced the first dataset designed for audiogram recognition as a standard for evaluating audiogram recognition models.

In addition, they designed a multi-stage audiogram recognition network: the first stage detects the audiogram, the second corrects perspective distortion, and the third detects the axes and symbols in order to read the chart.

The researchers said that although the system can recognize audiograms fully automatically, its accuracy still needs to be improved, especially for complex audiograms in which bone conduction and air conduction results are mixed together.

At the same time, due to the use of multi-stage computer vision.